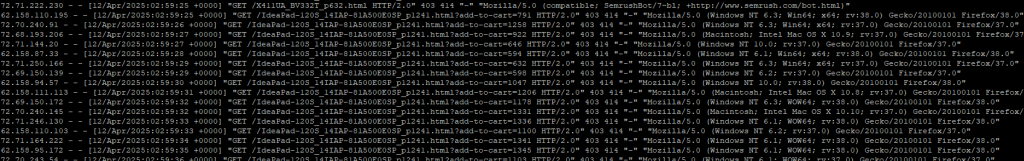

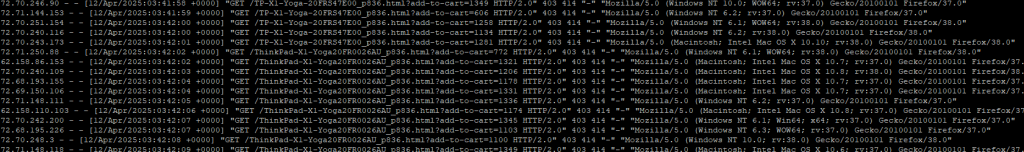

Web servers are constantly bombarded by traffic from a variety of sources, including legitimate users, bots, and scrapers. While some bots are useful (like search engine crawlers), others can be a nuisance. Some malicious bots masquerade as regular browsers using outdated user-agent strings, such as Gecko/20100101 Firefox/38.0. These requests can overwhelm a server, consuming resources and slowing down performance. In this post, we’ll explain how these fake user-agents can harm server performance and how blocking them can help optimize your server’s efficiency.

The Problem: Fake User-Agents and Malicious Bots

What Are Fake User-Agents?

User-agent strings are identifiers sent by browsers to web servers, telling the server what browser or tool is making the request. Legitimate browsers like Firefox, Chrome, and Safari send unique user-agent headers to help web servers respond with compatible content.

However, malicious bots and scrapers can spoof these user-agent strings to make their requests appear as though they’re coming from legitimate browsers. One common tactic is using outdated browser versions, like Gecko/20100101 Firefox/38.0, to disguise their identity and avoid detection.

Such fake user-agents are often a sign of bots, not real users, and they can severely impact server performance.

The Impact of Fake User-Agents on Your Server

Fake user-agent traffic, especially from bots using outdated or generic identifiers like Gecko/20100101 Firefox/38.0, can cause several problems:

- Overloaded Server Resources: Malicious bots often send large numbers of requests, consuming server CPU and memory. This can result in slower response times and, if the load goes unmanaged, crashes.

- Inefficient Crawling: Bots often make repeated requests for the same resources. This unnecessary traffic clutters your server logs, increases bandwidth consumption, and adds to the load without offering any real benefit to the site owner.

- Risk of Scraping: Bots masquerading as regular browsers can be used to scrape content. They may extract product data, blog posts, or even sensitive information without your permission, leading to intellectual property theft or a loss of competitive advantage.

The Solution: Blocking Fake User-Agents with .htaccess

Why Block Fake User-Agents?

Blocking fake user-agents is an effective way to reduce the load caused by unwanted bot traffic. Since these requests almost never come from legitimate users (Firefox 38 dates from 2015 and is long unsupported), they can usually be denied without affecting real visitors, freeing up resources for the people actually using your site.

By blocking specific user-agent strings, such as Gecko/20100101 Firefox/38.0, you can prevent these bots from making unnecessary requests and reduce server strain.

How to Block Fake User-Agents Using .htaccess

One of the easiest and most efficient ways to block fake user-agents is by editing the .htaccess file. This file is used to configure Apache server settings, and you can use it to filter out unwanted traffic based on specific patterns like user-agent strings.

Here’s a simple method to block user-agents that mimic old browser versions, including the notorious Gecko/20100101 Firefox/38.0:

- Access the .htaccess File: First, log into your web hosting account and navigate to the root directory of your website. Find the .htaccess file. If it’s not there, you can create a new one.

- Add the Blocking Rules: To block traffic from fake user-agents, add the following code to your .htaccess file:

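    # Block fake browsers and bots
    # Flag any user-agent claiming to be Firefox 37, 38, or 39
    SetEnvIfNoCase User-Agent "Firefox/3[7-9]" bad_ua
    # Flag the specific disguise discussed above (dot escaped so it matches literally)
    SetEnvIfNoCase User-Agent "Gecko/20100101 Firefox/38\.0" bad_ua
    # Return 403 Forbidden for every flagged request
    Deny from env=bad_ua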

- SetEnvIfNoCase: This directive checks the User-Agent header in incoming requests, ignoring case.

- User-Agent: Here, we are matching specific user-agent patterns, such as old Firefox versions (Firefox/3[7-9]) and known bot disguises (like Gecko/20100101 Firefox/38.0).

- Deny from env=bad_ua: Any request that matches the defined user-agent strings will be denied access and return a 403 Forbidden status.

- Save and Test: Once you’ve added this code, save the .htaccess file. To ensure that your site is still accessible, test it by visiting your site in various browsers and using online tools like GTmetrix to check your server performance.
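On Apache 2.4 and later, Deny from is kept only for backward compatibility through mod_access_compat. A minimal sketch of the same rule in the newer Require syntax might look like the following. It assumes mod_setenvif and mod_authz_core are loaded and that your host permits these directives in .htaccess (AllowOverride FileInfo and AuthConfig); treat it as a starting point, not a drop-in guarantee for every hosting setup.

```apache
# Sketch for Apache 2.4+: use this in place of the "Deny from env=bad_ua" line,
# not alongside it. Assumes mod_setenvif and mod_authz_core are available.
SetEnvIfNoCase User-Agent "Firefox/3[7-9]" bad_ua

<RequireAll>
    # Allow everyone by default...
    Require all granted
    # ...except requests that were flagged above.
    Require not env bad_ua
</RequireAll>
```

Mixing the old Deny syntax and the new Require syntax in the same context can produce confusing results, so it is usually safer to pick one style and stick to it.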

Why This Works: Understanding the Bot Behavior

Bots Behind Fake User-Agents

Many scrapers and bots use outdated user-agent strings like Gecko/20100101 Firefox/38.0 because they’re attempting to hide their true identity. These bots typically use scripting languages such as Python with libraries like Scrapy or Requests to send HTTP requests, which allow them to manipulate user-agent headers and impersonate legitimate browsers.

Blocking outdated user-agent strings is one way to identify and block these bots, as they often use these old strings to avoid detection by basic bot prevention mechanisms. By filtering out these requests, you’re essentially preventing a large chunk of automated, unnecessary traffic that would otherwise waste server resources.
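Scripts that never change the header at all are even easier to spot. As a hedged illustration, the rules below flag the default user-agent prefixes sent by the Requests and Scrapy libraries (python-requests/ and Scrapy/). They reuse the bad_ua variable from the blocking rules shown earlier, so the existing Deny line applies to them as well. Whether you want to block every such client is an assumption about your site: some legitimate integrations and monitoring scripts also use these libraries.

```apache
# Flag clients that send a library's default User-Agent unchanged.
# Reuses the bad_ua variable, so "Deny from env=bad_ua" also covers these.
SetEnvIfNoCase User-Agent "^python-requests/" bad_ua
SetEnvIfNoCase User-Agent "^Scrapy/" bad_ua
```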

Can’t Detect Every Bot? Not a Problem

While some sophisticated bots might disguise their user-agent strings even further, blocking old or suspicious user-agents is a simple yet effective first step. Additionally, combining this with other security measures like rate limiting, CAPTCHA challenges, and bot detection services can provide a comprehensive defense.

Additional Measures to Protect Your Server

While blocking fake user-agents will help mitigate some bot traffic, there are additional measures you can take to further secure your server and reduce load:

- Implement Rate Limiting: Limit the number of requests a single IP address can make within a specified time frame. This prevents bots from flooding your server with too many requests in a short period.

- Use a Web Application Firewall (WAF): Services like Cloudflare or Sucuri can detect and block malicious bot traffic before it reaches your server, providing an additional layer of protection.

- Monitor Server Logs Regularly: Keep an eye on your server logs to identify unusual patterns of traffic. Look out for spikes in requests with strange user-agent strings, IP addresses, or patterns of activity that resemble scraping attempts.
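Log monitoring can be made easier by writing flagged requests to their own file. The sketch below assumes you have access to the main server or virtual-host configuration, because CustomLog is not allowed in .htaccess. The log path is a hypothetical example, and the combined format nickname is assumed to be defined as in Apache's default httpd.conf.

```apache
# Goes in the server or virtual-host config, not in .htaccess.
# Writes only requests flagged with bad_ua to a separate log file.
# "logs/bad_ua.log" is a hypothetical path; adjust it for your server.
CustomLog "logs/bad_ua.log" combined env=bad_ua
```

Reviewing this file periodically shows how much traffic the rules catch and whether any real visitors are being blocked by mistake.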

Conclusion

Blocking fake user-agent strings, such as Gecko/20100101 Firefox/38.0, can significantly improve your server’s performance by reducing unnecessary bot traffic. These requests, which often come from scrapers and malicious bots, consume server resources without providing any value. By implementing simple .htaccess rules to block these user-agents, you can free up your server’s CPU, bandwidth, and memory, resulting in faster load times and more stable server performance.

In a world where bot traffic is becoming increasingly sophisticated, combining this method with other security techniques will help protect your server from the damage caused by unwanted scrapers and improve your website’s overall performance.
